From Memes to Mainstream: How Far-Right Extremists Weaponize AI to Spread Antisemitism and Radicalization

Abstract

In response to the events of October 7th, far-right extremists are deliberately exploiting mainstream discourse to sow extremist ideology and radicalize users. The findings of this report are based on our monitoring of online far-right extremist channels. These extremists exploit anti-Israel sentiments to disseminate antisemitic content on mainstream platforms and radicalize individuals across the ideological spectrum. This report focuses specifically on how the use of AI has facilitated innovation in these far-right strategies.

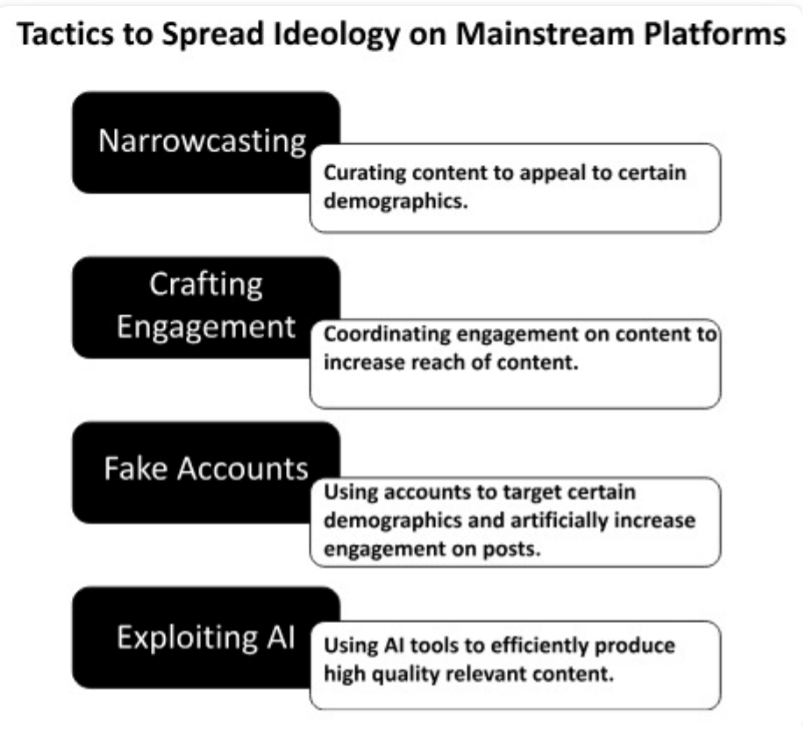

The report is divided into two parts. In the first part, Hijacking Anti-Israel Discourse to Promote Antisemitism and Radicalization, we show how users are coordinating meticulous strategies meant to influence people by taking advantage of current anti-Israel sentiments and disseminating antisemitic content on mainstream platforms. The intention is to normalize far-right ideologies and narratives within mainstream discourse, with the ultimate aim of radicalizing users. We present four tactics in the far-right modus operandi that are apparent in this campaign: (1) Narrowcasting, (2) Crafting Engagement, (3) Fake Accounts, and (4) Exploiting AI for Meme Creation.

These tactics are part of a growing technique of “meme warfare,” wherein extremists use memes to spread harmful ideologies. Memes normalize extremist beliefs, cloaking harmful ideologies in a legitimizing humorous form. We show that this notorious far-right technique is being innovated and intensified thanks to new Generative AI technologies.

The second part of this report, Disseminating AI images beyond far-right spaces, illustrates the use of “meme warfare” and the fruition of these ambitions through the examination of four case studies: (i) Brooklyn Synagogue Tunnel, (ii) Attacks by the Houthi terrorist organization in the Red Sea, (iii) Modern Propaganda Posters, and (iv) Fire Extinguisher Iconography. These case studies demonstrate the groups’ successful strategic planning, leading to maximized reach and resonance with mainstream audiences, ultimately exposing at least tens of millions to implicit dangerous narratives and stereotypes.

Introduction

Following the events of October 7th, a concerning phenomenon has emerged in which far-right extremists are seizing upon the situation to radicalize various groups. Our daily monitoring of both encrypted and unencrypted channels associated with online extremist communities has revealed a troubling pattern: extremist individuals are actively exploiting the circumstances to disseminate antisemitic content on mainstream social media platforms, aiming to radicalize individuals with differing ideologies, whom they derogatorily refer to as “normies.” They view the ongoing events as an opportunity to advance their agenda.

This adoption of AI technology underscores a key point outlined in our paper titled “Navigating Extremism in the Era of Artificial Intelligence.”[1] There, we emphasized how far-right extremists are actively seeking to use AI-generated content for political purposes. Since the publication of that report, there has been a noticeable uptick in the far-right’s use of AI technologies for this objective. This trend is facilitated by the increased availability of more sophisticated generative AI (GAI) tools specifically tailored to conservative users.

This paper offers an analysis of the specific tactics employed by far-right extremists in their efforts to radicalize others, focusing particularly on their engagement in “meme warfare.” Our monitoring efforts have revealed a discernible pattern in the increasing use of AI-generated content for strategically disseminating information to broader, mainstream audiences, aligning with the far-right’s tradition of employing “meme warfare.”[2]

This tactic serves as a means to “redpill,”[3] seeking to subvert mainstream narratives and propagate harmful beliefs while disguising them within a seemingly innocuous form: memes. Memes, typically comprising amusing or attention-grabbing content like captioned images or videos, spread virally across the internet, primarily through social media platforms. They come in various forms, including videos, images, or GIFs, and gain momentum through replication and widespread sharing.[4]

While the concept of meme warfare has historical roots, notably in the Soviet Union’s ‘dezinformatsiya’ campaigns, it has gained renewed potency in the digital age. The internet facilitates the widespread dissemination of deceptive content on an unprecedented scale. Despite the evident power of memetic warfare, Western nations have been relatively slow to develop their capabilities in this domain. Calls for institutions like NATO to establish dedicated meme warfare centers have circulated since at least 2006, yet concrete initiatives have not materialized. Nevertheless, interest in leveraging memes for strategic purposes persists as a form of information warfare.[5]

By exploiting the viral nature of memes, extremists disseminate content that, at first glance, may not be immediately recognized for its harmful implications. This approach poses a significant danger, as it can normalize or trivialize the seriousness of violent extremist ideologies.[6] Such content is also known as borderline content:[7] content that does not violate the rules of social media platforms but inherently carries dangerous or extremist ideology. The danger lies in the passive consumption and dissemination of such content, which, when not actively condemned or questioned, becomes implicitly normalized within public discourse. For some individuals, this may even mark the initial stage in their journey towards radicalization as part of the alt-right pipeline.[8]

The report is divided into two parts. In the first part, Hijacking Anti-Israel Discourse to Promote Antisemitism and Radicalization, we show how users are coordinating meticulous strategies meant to influence people by taking advantage of current anti-Israel sentiments and disseminating antisemitic content, with a particular focus on TikTok and X (formerly Twitter). The intention is to normalize far-right ideologies and narratives, with the ultimate aim of radicalization. We analyze four tactics used and discussed as part of a far-right meme warfare modus operandi: (1) Narrowcasting, (2) Crafting Engagement, (3) Fake Accounts, and (4) Exploiting AI for Meme Creation.

The second part of this report, Disseminating AI images beyond far-right spaces, illustrates the use of “meme warfare” and the fruition of these ambitions through the examination of four case studies: (i) Brooklyn Synagogue Tunnel, (ii) Attacks by the Houthi terrorist organization in the Red Sea, (iii) Modern Propaganda Posters, and (iv) Fire Extinguisher Iconography.

Part 1: Hijacking Anti-Israel Discourse to Promote Antisemitism and Radicalization

Far-right activists employ a central strategy of exploiting both global and local events to further their own agendas, with a primary aim of redpilling – radicalizing various groups by interposing far-right ideology into their respective discourse. For instance, following the US presidential elections, where Trump’s supporters felt disenfranchised, far-right extremists capitalized on this and sought to radicalize them.[9] Similarly, during the COVID-19 pandemic, they exploited the anti-vaccination movement to radicalize users and spread antisemitic conspiracy theories.[10]

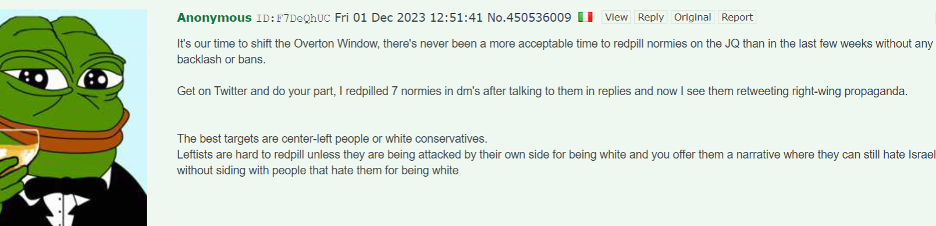

Our current monitoring indicates that following the events of October 7th, far-right extremists are capitalizing on the situation to radicalize various groups. Extremist users have been actively exploiting the situation to spread antisemitic content on mainstream social media platforms with the intention of radicalizing individuals with differing ideologies, whom they refer to as “normies.” They perceive this moment as opportune and pivotal for spreading their ideology.

(4chan; February 10, 2024)

The tactics described below are deeply interconnected; they are neither mutually exclusive nor sequential. The tactics are: (1) Narrowcasting, (2) Crafting Engagement, (3) Fake Accounts, and (4) Exploiting AI for Meme Creation.

The posts shown often combine more than one of these tactics, exemplifying the consolidation of a fundamental modus operandi for far-right meme warfare.

Tactic #1: Narrowcasting

Social networking platforms enable terrorists and extremists to employ a targeted approach called narrowcasting. The process involves tailoring content and messaging to specific segments of the population according to their values, preferences, demographic attributes, or memberships.[11] This is illustrated by the post below, in which a user on a neo-Nazi board strategizes ways to sow antisemitic conspiracies and rhetoric in Black online spaces.

(Far-right Board; January 19, 2024)

One user calls for shifting the Overton window[12], claiming that “there’s never been a more acceptable time . . . than in the last few weeks” (referring to the Israel–Hamas war) to “redpill normies[13] on the JQ[14]”. The user encourages others to target and redpill center-left and white conservative users on X. This strategic exploitation of current political tensions to shift the Overton window demonstrates a deliberate effort to normalize antisemitism.

On one extremist imageboard, we observed a discussion on exploiting anti-Israel sentiments[15] among young people (Gen-Z) to subversively plant antisemitic beliefs. Users shared and discussed AI-generated antisemitic talking points and strategized dissemination tactics. One strategy discussed for reaching the desired Gen-Z audience was creating 1-minute clips cut from neo-Nazi propaganda films and sharing them on short-video platforms like TikTok and YouTube Shorts.

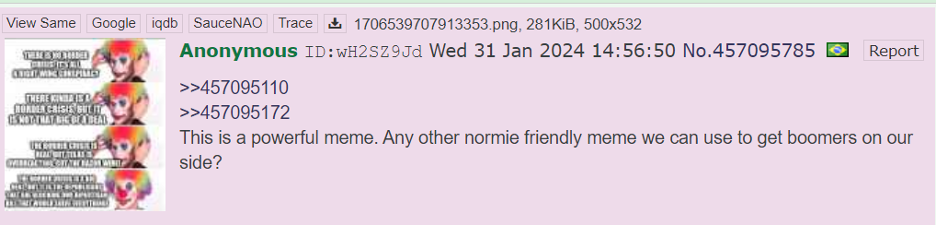

Some users explicitly strategize how to target age demographics, as in the post below, which discusses effective memes for radicalizing baby boomers, the generation of individuals born between World War II and the 1960s.

(4chan; January 31, 2024)

Tactic #2: Crafting Engagement

Users strategize ways to make their “redpills” appear more legitimate. One such tactic is to drive and craft engagement around their content.

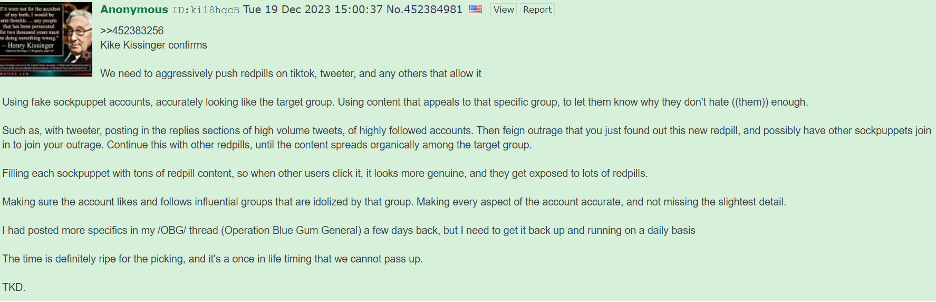

In the following post, a user on 4chan says that “the time is definitely ripe for the picking, and it’s a once in life timing[sic]”. He explicitly outlines a strategy for users to create fake accounts on platforms like X and TikTok to share “redpills” about Jewish people.

The user explains dissemination strategies for achieving high engagement, such as replying to highly followed accounts and using other fake accounts to boost the content. The user also emphasizes tailoring each account to fit in with the intended audience and using content that appeals to the targeted group, so as to most effectively convince its members of the veracity of the antisemitic messaging.

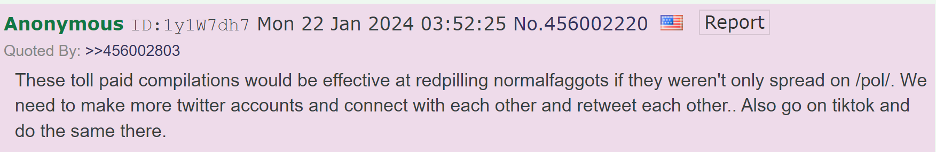

Users encourage one another to boost their content using fake accounts on platforms like X and TikTok. This is explicitly done to spread redpills and boost engagement on content originating from far-right sources.

(4chan; January 22, 2024)

(4chan; October 28, 2023)

Tactic #3: Fake Accounts

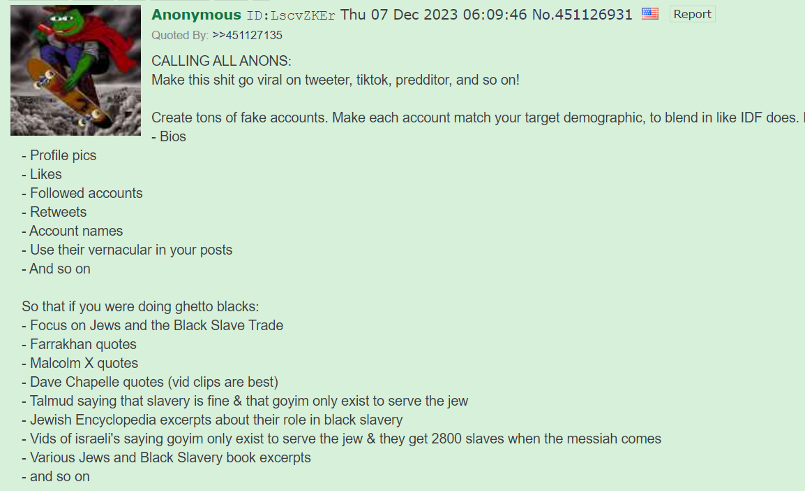

Users explicitly encourage creating fake accounts for the purposes of narrowcasting and crafting seemingly authentic engagement.

In the following post, a user shares a strategy for building convincing fake accounts on X, TikTok, and Reddit. The strategy specifically instructs users on narrowcasting tactics, with an account and content strategy tailored to the demographic being targeted. The post contains many antisemitic talking points aimed specifically at the Black community.

Tactic #4: Exploiting AI for Meme Creation

Central to meme warfare is content creation. Far-right extremists have been enthusiastically exploring the potential for AI to streamline this process. Mainstream GAI tools are consistently being exploited in order to create offensive and harmful content.[16][17] In recent influence campaigns among the far-right, the use of AI has been increasingly central to their efforts.

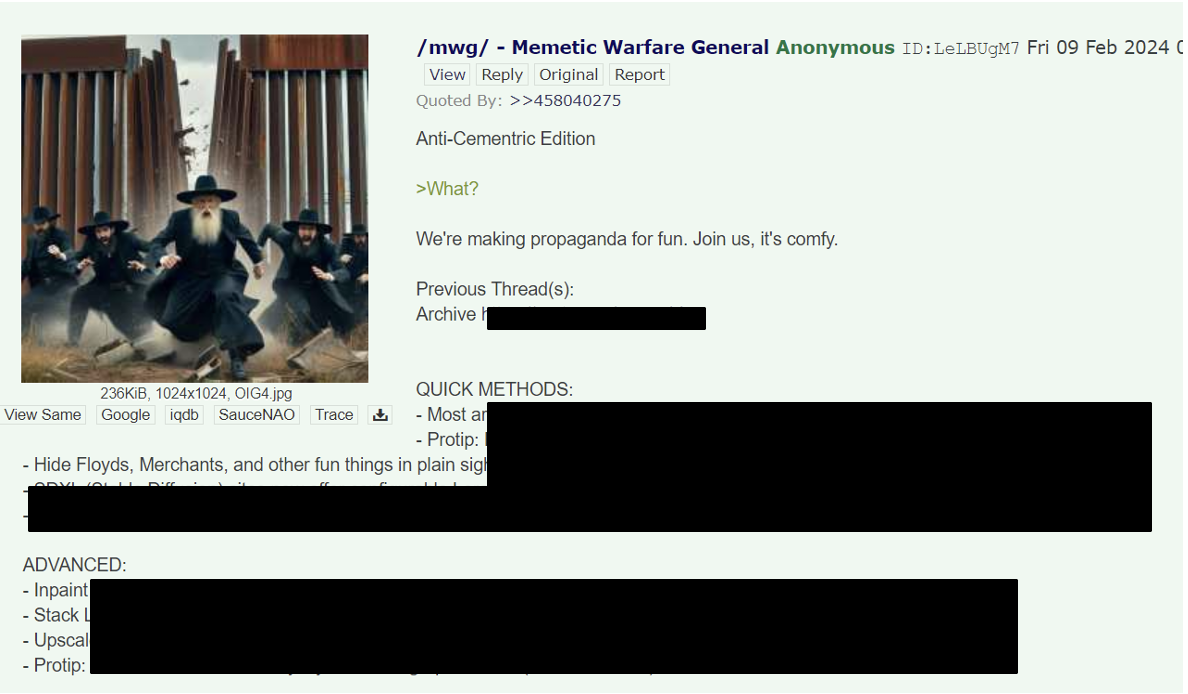

A frequently posted tutorial explains how to use AI to generate images for the purpose of “memetic warfare”, claiming “if we can direct our autism and creativity towards our common causes, victory is assured” and encouraging users to share their generated images “beyond the site.” This acts as an explicit call to action to spread AI-generated images containing hateful imagery, which users perceive as an effective and persuasive[18] means to radicalize others. This tutorial is a copy-paste that has appeared at least 500 times on 4chan boards as part of /mwg/ (Memetic Warfare General) threads, where users discuss and collaborate on their AI-generated images, tactics, and strategies.

(4chan; February 9, 2024)

In our previous report, we noted that users are frustrated with restrictions on GAI tools and are seeking “uncensored bots”.[19] Since then, we have noted an increase in the availability of AI tools designed for far-right communities.

(4chan; January 4, 2023)

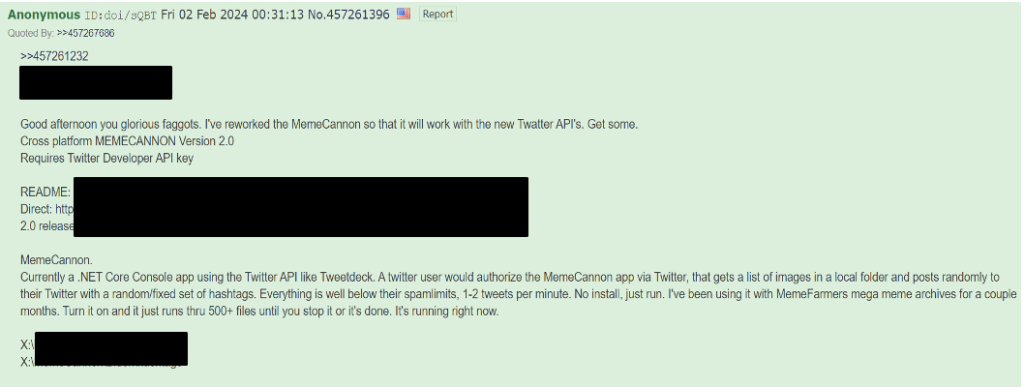

Users are also developing bespoke software for meme warfare. This is exemplified by tools like the “Meme Cannon”, which allows users to automate posts from X accounts at a rate of 1-2 tweets per minute.

(4chan; February 2, 2024)

Discussions on Legality, Moderation, and Safeguards

We observed many discussions by users concerned about the legality of certain meme warfare tactics. The goal of these discussions was often to understand which actions can be taken without legal repercussions.

(Endchan; February 8, 2024)

Many users are also frustrated by moderation on social media platforms, where they often get banned for sharing extremist content. We observed many tutorials explaining how to bypass bans on social media platforms, like X.

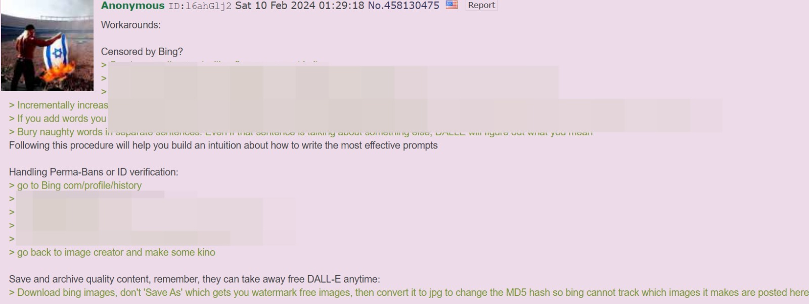

These evasion efforts extend to GAI tools as well, with users explaining how to circumvent account bans and even ID verification requirements. This is part of a larger trend we noted in our previous report, where far-right users strategize ways to bypass safeguards in mainstream AI tools in order to generate harmful content.[20]

(4chan; February 10, 2024)

Sometimes frustration with moderation on social media platforms leads users to shift their ideological dissemination to physical spaces.

(4chan; February 10, 2024)

Part 2: Disseminating AI images beyond far-right spaces

In the following case studies, we demonstrate how far-right users are exploiting GAI to create antisemitic and harmful content aiding their various purposes. Some of this content then spreads to mainstream platforms, gaining engagement with millions of users.

(i) Brooklyn Synagogue Tunnel

On January 8, 2024, news coverage of a tunnel found under a Brooklyn synagogue became a major topic of discussion among extremists online. Far-right influencers heavily promoted antisemitic tropes and conspiracies claiming that the tunnel was used for child trafficking and pedophilia.[21] Central to these theories was a mattress taken from the tunnel that had an unknown stain on it. Within hours of the first coverage of the event, far-right users began generating images using AI tools (largely from Midjourney, OpenAI and Microsoft, as well as open-source tools) and sharing them.

Some of the images were memes, dehumanizing Jews and depicting them in severe caricatures as rats and demons in tunnels. Others were more realistic, depicting bloody mattresses, trafficked children and Jews escaping tunnels.[22] Many of these images were posted with the goal of spreading them widely: they were shared on Telegram channels dedicated to mainstream social media distribution, while users on 4chan shared operational strategies to maximize the reach of their images.

(4chan; January 11, 2024)

(ii) Attacks by the Houthi terrorist organization in the Red Sea

Since the outbreak of the Israel-Hamas war following Hamas’ October 7 massacre, the Houthis[23] have been targeting commercial ships associated with Israel in the Red Sea, one of the world’s vital maritime trade routes. As a consequence of these Houthi attacks, major shipping and oil companies have been compelled to halt transit through this area. This situation raises concerns that the conflict between Israel and Hamas could expand into a broader regional crisis.

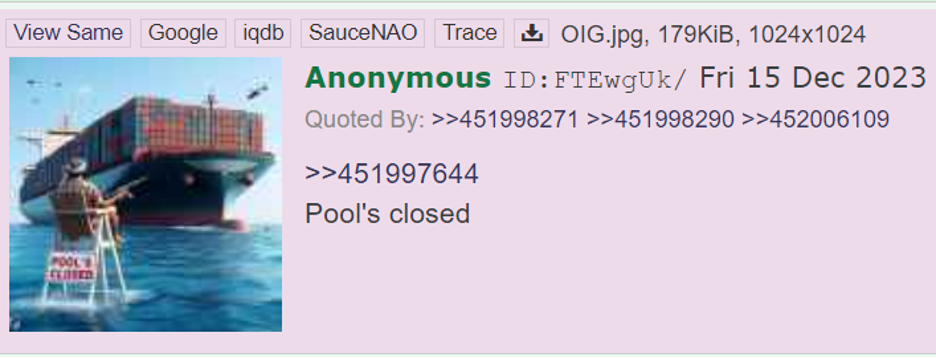

Since the onset of these attacks, far-right extremists have been crafting AI-generated images, mostly applauding the actions of the Houthis. One example is an image portraying a combatant halting a ship. The image, crafted by far-right extremists using AI, was primarily circulated in praise of the Houthis’ actions. It presents the scene humorously, incorporating a reference to the racist “pool’s closed”[24] meme. Originally published on 4chan on December 15, 2023, the image quickly spread across mainstream platforms such as Twitter and TikTok.

(4chan; December 15, 2023)

A week after it was posted, users on 4chan were celebrating as the meme garnered hundreds of thousands of views across various platforms.

(4chan; December 22, 2023)

Jackson Hinkle[25], a social media influencer with 2.4 million followers on X, stands out as one of the leading commentators discussing the Israel-Hamas conflict on the platform. His account, which is noted for sharing misinformation and antisemitic conspiracy theories, was allegedly the X account with the most views in October 2023.[26] Less than a month after the meme first appeared on 4chan, Hinkle tweeted the AI-generated image, which was viewed over 1.2 million times. Three days later, Hinkle tweeted that he “stands with Yemen’s Houthis”.

(X; January 10, 2024)

This instance illustrates how far-right users exploit the Israel-Palestine discourse by generating content that indirectly garners mainstream support or validation for the Houthis, a group designated by the US as a terror organization and known for its homophobic, misogynistic, anti-American, and antisemitic ideologies.[27] Minimization and legitimization of a group like the Houthis, which notoriously has “Death to Israel” and “Damn the Jews” as part of its slogan[28], is inherently antisemitic.

(iii) Modern Propaganda Posters

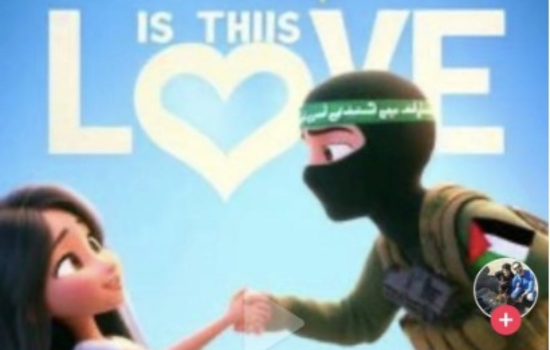

During November 2023, parody Disney and Pixar posters created using GAI were widely distributed as memes.[29] Far-right boards were filled with posters for fictional animated movies with offensive themes, such as Adolf Hitler, African-American stereotypes, antisemitism, HIV, 9/11, and more.[30]

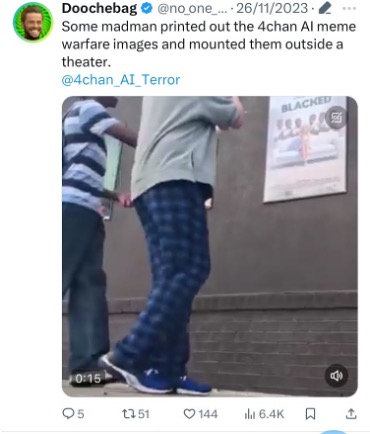

In one instance, a YouTuber who posts prank videos (a genre often associated with prioritizing shock value over thoughtful content creation[31]) printed AI-generated posters originating from 4chan that depicted offensive themes such as racism, misogyny and hate groups, and displayed them in physical spaces, inadvertently blurring the line between online and offline propaganda.

(X; November 26, 2023)

Videos of the “prank” were posted on TikTok and YouTube. On YouTube they received over 800,000 cumulative views; on TikTok, just four clips cumulatively received 13.4 million views. Viewership of this magnitude, along with a high proportion of positive feedback (e.g. likes), leads to desensitization to the harmful aspects of the content, and can even shift into a positive perception of it through social influence.[32]

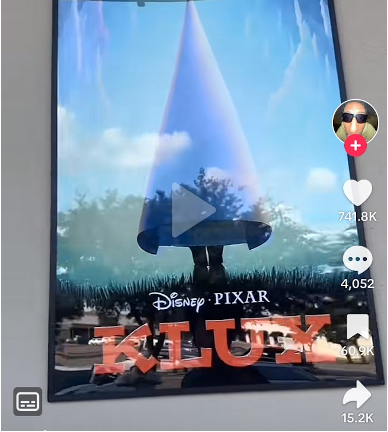

(TikTok; November 16, 2023)

(TikTok; November 5, 2023)

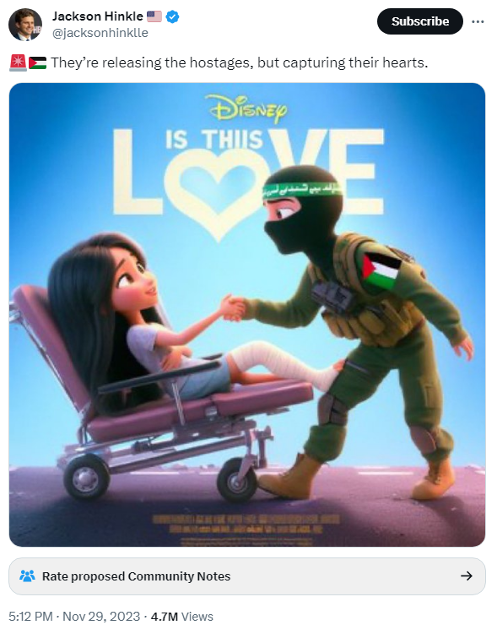

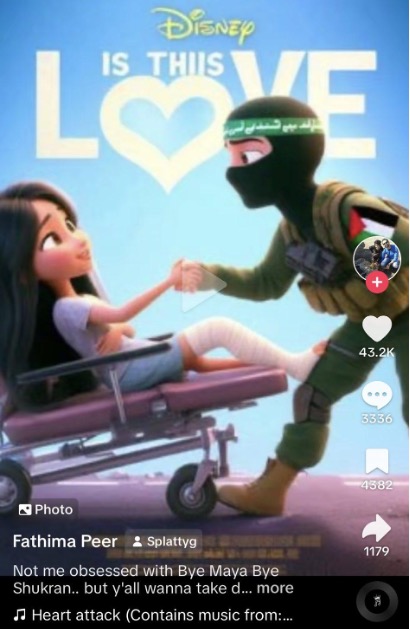

Despite safeguards implemented in November 2023 to block copyright infringement[33], far-right users managed to bypass them in order to create more parody posters. One such example is a meme featuring Maya Regev, an Israeli hostage held by Hamas in Gaza following the attacks on October 7, 2023. She was released on November 25 and brought to hospital for surgery for gunshot wounds. A Pixar-style poster mocking Maya Regev was generated by a user on 4chan, depicting her in a romanticized dynamic with her Hamas captor. This image became widely distributed, particularly on platforms like X and TikTok. Jackson Hinkle himself also posted the image, where it gained over 4.7 million views.

(X; November 29, 2023)

(TikTok; December 3, 2023)

This instance illustrates the objectives of “meme warfare,” where dehumanization is veiled under humor, allowing the image to spread widely and reach a large audience while still conveying its harmful implicit message. By presenting the content in a positive or humorous light, users inadvertently normalize the minimization of, and desensitization toward, victims of terror and may even implicitly legitimize the terror group involved. By adopting familiar meme formats, far-right users effectively exploit mainstream pro-Palestinian sentiments to further their own agenda, promoting the legitimacy of antisemitism and a terrorist organization, as well as perpetuating the dehumanization of Jewish people.

These posters have emerged as a highly effective tool for redpilling, appealing to a broad audience with their superficial yet offensive “black comedy.” The viral spread of these posters is alarming, particularly as research indicates that the perceived popularity or acceptability of content, conveyed through endorsement metrics such as like counts, is enough to shift one’s political attitudes.[34] Desensitization is a crucial lens through which to analyze this phenomenon, as repeated exposure to hateful content tends to desensitize individuals and may even lead to increased prejudice,[35] particularly when presented in a seemingly acceptable and positive light. Moreover, partisan biases influence perceptions of harm and desensitization, with hateful content aimed at outgroups often seen as less harmful.[36] This vulnerability amplifies the impact of “meme warfare” on minorities, as others may become more easily desensitized to the harmful elements of such content.

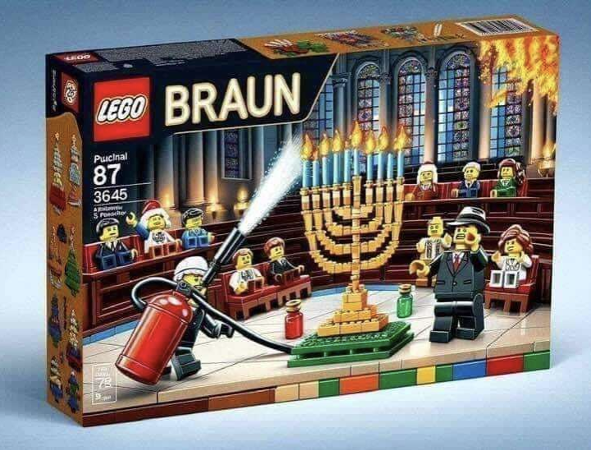

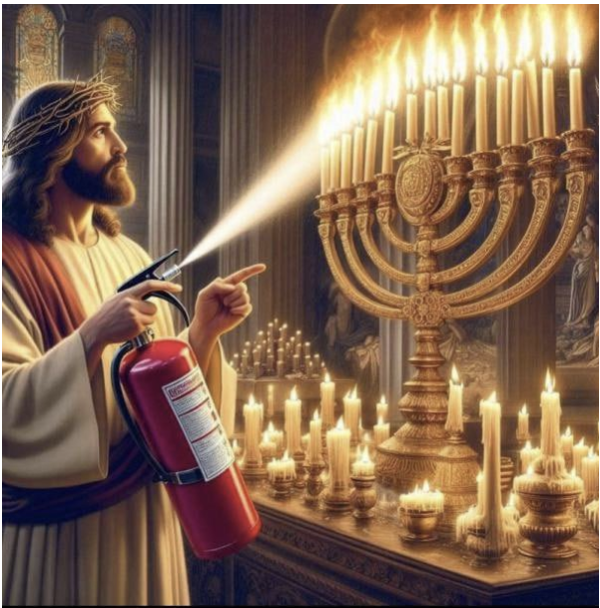

(iv) Fire Extinguisher Iconography

On December 12, 2023, a far-right Polish lawmaker extinguished Hanukkah candles at a ceremony for the Jewish community in the Polish Parliament. The act was celebrated within far-right circles, which applauded the politician. The fire extinguisher, central to this incident, became an antisemitic symbol, termed a “universal sign of the resistance to Jews”[37] by Lucas Gage, a prominent far-right figure. The symbol gained prominence through the widespread dissemination of images initially generated by far-right extremists using AI and later adopted by wider far-right circles. The rapid adoption of such symbols is facilitated by GAI, allowing the far-right to quickly propagate a plethora of memes and images reinforcing their association with extremist ideologies.

Users shared AI-generated memes, such as the one below, featuring an anthropomorphized fire extinguisher depicted as Adolf Hitler. One particularly notable image portrayed a fictional Lego set, showing a Hanukkah menorah being extinguished by a Lego minifigure equipped with a fire extinguisher. The Lego minifigure was humorously named “Braun,” a reference to the antisemitic Polish MP Grzegorz Braun. This trend of AI-generated Lego sets gained traction in late 2023.[38]

A user on 4chan exploited this trend and generated the antisemitic Lego set described. A reverse image search indicated that the image has spread widely, returning hundreds of results and appearing multiple times on Facebook, X, TikTok and even LinkedIn.

(Gab; December 21, 2023)

(4chan; December 12, 2023)

The fire extinguisher, as wielded in the AI-generated figure of Jesus, becomes a metaphor for the far-right’s crusade against what they perceive as the corrupting influence of Judaism. It frames their cause as a battle between good and evil, thereby elevating the moral authority of both the cause and the symbol.

(Telegram; December 17, 2023)

(4chan; January 12, 2024)

The deliberate integration of this symbol into the far-right digital identity serves to reinforce cohesion. The AI-generated memes depicting the fire extinguisher, ranging from cosplaying as unified Roman legions fighting Jews to integration with Third Reich symbolism, project a desire for collective identity under a unified mission.

Individuals tend to strongly favor adopting group symbols as a means to convey an intimidating and unified front.[39] Far-right users perceive the power of the fire extinguisher symbol in conveying solidarity and strength, and leverage AI to project it. By making the group appear as a more unified, competent entity, the symbol serves to attract and retain members, meeting a range of psychological needs from belongingness to reducing uncertainty.[40]

(X; December 31, 2023)

In essence, the emergence of the fire extinguisher symbol and its reinforcement through AI technologies illustrate the sophisticated use of AI in the service of group identity formation and mobilization. This is critical to establishing an appearance that is most appealing to the prime demographic that tends to join far-right communities.[41]

Conclusion

In this paper we highlight the strategies employed by extremists following the events of October 7th to disseminate antisemitic content on mainstream platforms and radicalize individuals across the ideological spectrum.

Our monitoring revealed that far-right extremists on alternative platforms are coordinating meticulous strategies for influence campaigns to disseminate antisemitic content on mainstream platforms, with a particular focus on TikTok and X (formerly Twitter) and with the ultimate aim of radicalization. In the first part of the paper we analyze four elements in the far-right modus operandi that are very apparent in this recent campaign: (1) Narrowcasting, (2) Crafting Engagement, (3) Fake Accounts, and (4) Exploiting AI for Meme Creation.

(1) Narrowcasting:

Social networking platforms enable extremists to target specific segments of the population through narrowcasting, tailoring content to align with their values and preferences. Discussions in far-right spaces reveal strategies to hijack online discourse surrounding the Israel-Hamas war among specific demographics and interpose curated antisemitic arguments. Extremists discuss tactics like creating short videos on TikTok to reach Gen-Z, or curating antisemitic arguments that appeal to the African-American community.

(2) Crafting Engagement:

Extremists strategize ways to make their content appear more legitimate by crafting engagement through fake accounts and targeted dissemination. This involves creating fake profiles on platforms like X and TikTok to spread antisemitic messaging and boost engagement. Tactics include replying to highly followed accounts, creating artificial engagement on posted content, and engaging users in conversation through direct messages. Extremists also meticulously monitor engagement metrics such as likes, shares, and comments to gauge the effectiveness of their dissemination efforts.

(3) Fake Accounts:

Extremists advocate for the creation of fake accounts on social media platforms for the purpose of narrowcasting and crafting seemingly authentic engagement. Discussions in far-right spaces outline strategies for building convincing fake profiles tailored to targeted demographics and for boosting engagement on content. This tactic highlights the deliberate manipulation of online identities to propagate extremist narratives.

(4) Exploiting AI for Meme Creation:

Extremists are leveraging AI technology to create memes containing subversive messages with the potential to go viral. Tutorials on platforms like 4chan encourage users to use AI-generated images for “meme warfare,” viewing them as powerful tools for radicalization. The proliferation of strategies to exploit AI tools within far-right communities, along with the development of bespoke software, underscores a concerted effort to weaponize the technology for ideological dissemination.

In the second section of the paper, we show the culmination of the “meme warfare” tactics, shedding light on the escalating use of GAI by far-right extremists to strategically disseminate their memes beyond their own spaces. This strategy has proven successful in expanding the reach of their content to broader audiences on mainstream social media platforms such as X and TikTok. We illustrate how this content inherently normalizes offensive narratives and dehumanization, with the ultimate aim of “red-pilling” individuals towards extremist ideologies.

Our content analysis highlights a troubling trend: far-right extremists are increasingly utilizing AI tools to swiftly and effectively produce memes, thereby weaponizing meme warfare as a pivotal component of their strategies.

Through the examination of four case studies, we present the rapid far-right response to current events, wherein users exploit AI technology with alarming efficiency to propagate antisemitic values through content tailored for mass appeal. We demonstrate their meticulous planning to maximize reach and content curation for their target audience. This strategy yields tangible results: content originating from fringe spaces reaches audiences numbering in the tens of millions, exposing them to dangerous narratives and harmful stereotypes. Notably, these memes also go beyond the screen, manifesting in the physical world.

These memes can be widely distributed and reach millions of people. One such example is a meme featuring Maya Regev, an Israeli hostage held by the Hamas terror organization in Gaza following the attacks on October 7, 2023. She was released on November 25. A Pixar-style poster mocking Maya Regev was generated by a user on 4chan, depicting her in a romanticized dynamic with her captor. This image became widely distributed, particularly on platforms like Twitter and TikTok. Jackson Hinkle himself also posted the image, where it gained over 4.7 million views, an example of the reach such content can achieve.

These examples underscore how GAI has revolutionized meme warfare for far-right groups, eliminating barriers of time, skill, and resources. From Lego sets to Disney posters to naval blockades, far-right users demonstrate their adaptability and proficiency in navigating shifting trends and events, ensuring their messaging resonates widely. This evolution in content creation underscores their growing political influence across the digital landscape, emphasizing the imperative for vigilant responses and regulatory measures from social media platforms, along with proactive action taken by policymakers.

[1] Koblentz-Stenzler, L., & Klempner, U. (2023, November). Navigating Extremism in the Era of Artificial Intelligence. International Institute for Counter-Terrorism. https://ict.org.il/navigating-extremism-in-the-era-of-artificial-intelligence/

[2] Meme warfare – the use of slogans, images, and video on social media for political purposes, often employing disinformation and half-truths. (Donovan J., “How memes got weaponized: A short history”, MIT Technology Review, October 24, 2019, at https://www.technologyreview.com/2019/10/24/132228/political-war-memes-disinformation/ )

[3] “in an extremist context it means one has bought into at least one trope of the far-right movement” – from ADL Glossary of Hate

[4] Munson Olivia, “What is a meme? They can be funny and cute or full of misinformation. We explain”, USA Today, October 13 2022, at https://www.usatoday.com/story/news/2022/10/13/what-is-a-meme-definition-examples/8074548001/

[5] Ascott Tom, “How memes are becoming the new frontier of information warfare”, Australian Strategic Policy Institute, February 19 2020, at https://www.aspistrategist.org.au/how-memes-are-becoming-the-new-frontier-of-information-warfare/

[6] NCTC, DHS, FBI, “First Responders Toolbox”, July 13, 2022, at https://www.dni.gov/files/NCTC/documents/jcat/firstresponderstoolbox/128S_-_First_Responders_Toolbox_-_Use_of_Memes_by_Violent_Extremists.pdf

[7] GIFCT. (2023). Borderline Content: Understanding the Gray Zone. https://gifct.org/wp-content/uploads/2023/06/GIFCT-23WG-Borderline-1.1.pdf

[8] Munn, L. (2019). Alt-right pipeline: Individual journeys to extremism online. First Monday, 24(6). https://doi.org/10.5210/fm.v24i6.10108 ; Cauberghs, O. (2023, November 13). For the Lulz?: AI-generated Subliminal Hate is a New Challenge in the Fight Against Online Harm. GNET. https://gnet-research.org/2023/11/13/for-the-lulz-ai-generated-subliminal-hate-is-a-new-challenge-in-the-fight-against-online-harm/

[9] Koblentz-Stenzler Liram and Pack Alexander, “American Far-Right – Trump Supporters Face Threat of Radicalization by White Supremacists”, ICT, January 22, 202, at https://ict.org.il/american-far-right-trump-supporters-face-threat-of-radicalization-by-white-supremacists/

[10] Koblentz-Stenzler Liram, “Infected by Hate: Far-Right Attempts to Leverage Anti-Vaccine Sentiment”, JSTOR, March 1 2021, https://www.jstor.org/stable/resrep30926?seq=1

[11] Weimann Gabriel, New Terrorism and New Media, Wilson Center, Research Series vol 2, p.3, at https://www.wilsoncenter.org/sites/default/files/media/documents/publication/STIP_140501_new_terrorism_F.pdf

[12] A term referring to what is within the range of tolerated opinions within mainstream discourse

[13] A mainstream “normal” person

[14] “Jewish Question” – the question of how to rid society of Jewish people

[15] Muchnick, J., & Kamarck, E. (2023, November 9). The generation gap in opinions toward Israel. Brookings. https://www.brookings.edu/articles/the-generation-gap-in-opinions-toward-israel/

[16] Koblentz-Stenzler, L., & Klempner, U. (2023, November). Navigating Extremism in the Era of Artificial Intelligence. International Institute for Counter-Terrorism. https://ict.org.il/navigating-extremism-in-the-era-of-artificial-intelligence/

[17] Lee, T., & Kolina, K. (2023, October 6). The Folly of DALL-E: How 4chan is Abusing Bing’s New Image Model. Bellingcat. https://www.bellingcat.com/news/2023/10/06/the-folly-of-dall-e-how-4chan-is-abusing-bings-new-image-model/

[18] Mishra, A., & Karumbaya, V. (2024, February 15). A deadly trifecta: disinformation networks, AI memetic warfare, and deepfakes. GNET. https://gnet-research.org/2024/02/15/a-deadly-trifecta-disinformation-networks-ai-memetic-warfare-and-deepfakes/

[19] Koblentz-Stenzler, L., & Klempner, U. (2023, November). Navigating Extremism in the Era of Artificial Intelligence. International Institute for Counter-Terrorism. https://ict.org.il/navigating-extremism-in-the-era-of-artificial-intelligence/

[20] Koblentz-Stenzler, L., & Klempner, U. (2023, November). Navigating Extremism in the Era of Artificial Intelligence. International Institute for Counter-Terrorism. https://ict.org.il/navigating-extremism-in-the-era-of-artificial-intelligence/

[21] Carter, C. (2024, January 16). Far-right figures are spreading antisemitism and conspiracy theories in response to news of a tunnel under a New York synagogue. Media Matters for America. https://www.mediamatters.org/antisemitism/far-right-figures-are-spreading-antisemitism-and-conspiracy-theories-response-news#paragraph--section-heading--3456382

[22] Tunnel Discovered Under Chabad Headquarters Sparks Antisemitic Firestorm Online. (2024, January 11). ADL. https://www.adl.org/resources/blog/tunnel-discovered-under-chabad-headquarters-sparks-antisemitic-firestorm-online

[23] The Houthis are Iran’s proxy terrorist organization in Yemen (Riedel Bruce, “Who are the Houthis, and why are we at war with them?”, Brookings, December 18, 2017, at https://www.brookings.edu/articles/who-are-the-houthis-and-why-are-we-at-war-with-them/)

[24] Webber, N. (2013). “Grief Play, Deviance and the Practice of Culture”. In Exploring Videogames. Leiden, The Netherlands: Brill. https://doi.org/10.1163/9781848882409_017

[25] Hinkle embodies the merging of far-right and far-left politics in his approach to the Israel-Hamas conflict, identifying himself as an “American Conservative Marxist-Leninist”.

[26] Klee, M. (2023, November 1). Verified hate speech accounts are pivoting to Palestine for clout and cash. Rolling Stone. https://www.rollingstone.com/politics/politics-features/twitter-hate-speech-accounts-palestine-clout-1234867382/

[27] Amnesty International. (2022). Human rights in Yemen. https://www.amnesty.org/en/location/middle-east-and-north-africa/yemen/report-yemen/

[28] Weinberg, D. (2020, November 20). Why Do Houthis Curse the Jews? ADL. https://www.adl.org/resources/news/why-do-houthis-curse-jews

[29] Sweat, Z. (2023, November 7). Fake Disney-Pixar movie posters are a massive meme trend online, but some are stirring up controversy. Know Your Meme. https://knowyourmeme.com/editorials/meme-review/fake-disney-pixar-movie-posters-are-a-massive-meme-trend-online-but-some-are-stirring-up-controversy

[30] Growcoot, M. (2023, November 20). Microsoft AI image Generator blocks ‘Disney’ after viral movie poster trend. PetaPixel. https://petapixel.com/2023/11/20/microsoft-ai-image-generator-blocks-disney-after-viral-movie-poster-trend/

[31] Romano, A. (2018, January 3). Logan Paul, and the toxic prank culture that created him, explained. Vox. https://www.vox.com/2018/1/3/16841160/logan-paul-aokigahara-suicide-controversy

[32] Klempner, U. (2023, October 23). Emojis of Terror: Telegram’s role in perpetuating extremism in the October 2023 Hamas-Israel war. GNET. https://gnet-research.org/2023/10/23/emojis-of-terror-telegrams-role-in-perpetuating-extremism-in-the-october-2023-hamas-israel-war/

[33] Growcoot, M. (2023, November 20). Microsoft AI image Generator blocks ‘Disney’ after viral movie poster trend. PetaPixel. https://petapixel.com/2023/11/20/microsoft-ai-image-generator-blocks-disney-after-viral-movie-poster-trend/

[34] Conzo, P., Taylor, L. K., Morales, J. S., Samahita, M., & Gallice, A. (2023). Can ❤s Change Minds? Social Media Endorsements and Policy Preferences. Social Media + Society, 9(2). https://doi.org/10.1177/20563051231177899

[35] Soral, W., Bilewicz, M., & Winiewski, M. (2017, September). Exposure to hate speech increases prejudice through desensitization. Aggressive Behavior, 44(2), 136–146. https://doi.org/10.1002/ab.21737

[36] Langenkamp, M. (2021). When Hatred Becomes Mundane: Desensitization After Repeated Exposure to Hate Speech [University of Oslo]. https://www.duo.uio.no/bitstream/handle/10852/89994/Master-Thesis_Candidate-2_Maren-Langenkamp.pdf?sequence=11&isAllowed=y

[37] Hume, T. (2023, December 21). An Antisemitic Fire Extinguisher Attack on a Menorah Has Become a Global Far-Right Meme. Vice. https://www.vice.com/en/article/n7e54k/grzegorz-braun-antisemitic-fire-extinguisher-meme

[38] Schefcik, D. (2023, November 7). The AI Revolution: How Artificial Intelligence Is Impacting the LEGO Community. BrickNerd. https://bricknerd.com/home/the-ai-revolution-how-artificial-intelligence-is-impacting-the-lego-community-11-7-23

[39] Callahan, S. P., & Ledgerwood, A. (2016). On the psychological function of flags and logos: Group identity symbols increase perceived entitativity. Journal of Personality and Social Psychology, 110(4), 528–550. https://doi.org/10.1037/pspi0000047

[40] Ibid.

[41] Scully, A. (2021, October 10). The Dangerous Subtlety of the Alt-Right Pipeline. Harvard Political Review. https://harvardpolitics.com/alt-right-pipeline/